The Transformer Paradox: Overtrained but Not Overfit

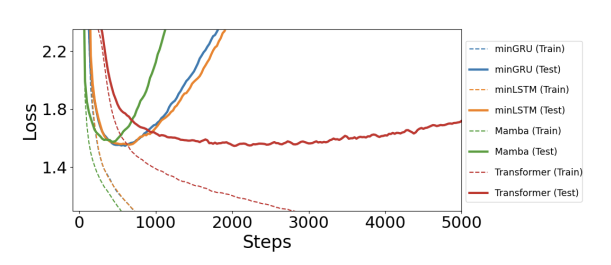

Something about the transformer architecture lets it be heavily overtrained without significant overfitting.

Other architectures don't appear to share this property, and most ML folks had assumed it simply wasn't possible.
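
Overtraining and overfitting are easy to conflate. Overtraining means running far more optimization steps or epochs than a model "needs." Overfitting is what you see when validation loss stops improving (or rises) while training loss keeps falling. Here is a minimal sketch of a patience-style check for that overfitting signature, similar in spirit to early stopping. The function name `overfit_epoch`, the `patience` rule, and the toy loss curves are illustrative assumptions, not taken from the linked paper.

```python
from typing import Optional, Sequence


def overfit_epoch(train_loss: Sequence[float], val_loss: Sequence[float],
                  patience: int = 2) -> Optional[int]:
    """Hypothetical helper: return the epoch with the best validation loss if,
    after it, validation loss fails to improve for `patience` consecutive epochs
    while training loss keeps decreasing. Return None if that never happens.
    Assumes both sequences have the same length (one value per epoch)."""
    best_val = float("inf")
    best_epoch = 0
    streak = 0
    for epoch in range(len(val_loss)):
        if val_loss[epoch] < best_val:
            # Validation still improving: no sign of overfitting yet.
            best_val = val_loss[epoch]
            best_epoch = epoch
            streak = 0
        elif epoch > 0 and train_loss[epoch] < train_loss[epoch - 1]:
            # Training loss still falling while validation has stalled or worsened.
            streak += 1
            if streak >= patience:
                return best_epoch
        else:
            # Both curves stalled: looks like convergence, not overfitting.
            streak = 0
    return None


# Made-up toy curves, for illustration only.
# Long training, but validation keeps tracking training ("overtrained, not overfit").
print(overfit_epoch([2.0, 1.5, 1.2, 1.0, 0.9, 0.85, 0.8],
                    [2.1, 1.6, 1.3, 1.1, 1.0, 0.95, 0.9]))
# Validation turns upward after epoch 3 while training keeps dropping (overfit).
print(overfit_epoch([2.0, 1.5, 1.2, 1.0, 0.8, 0.6, 0.4],
                    [2.1, 1.6, 1.4, 1.35, 1.4, 1.5, 1.6]))
```

The first call prints `None` and the second prints `3`. In these terms, the claim above is that transformers tend to look like the first curve even when trained far past the point where other architectures would look like the second.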

Source: https://arxiv.org/abs/2410.01201